Intel Data Center GPU Max Series

Maximize impact with the Intel Data Center GPU Max Series, Intel’s highest performing, highest density, general-purpose discrete GPU, which packs over 100 billion transistors into one package and contains up to 128 Xe Cores–Intel’s foundational GPU compute building block.

Click here to jump to more pricing!

Overview:

When deploying GPUs in a high-performance computing (HPC) environment, customers face substantial obstacles and inefficiencies caused by the need to port and refactor code. Their efforts are further hampered by proprietary GPU programming environments that prohibit portability between GPU vendors and often result in inconsistency between CPU and GPU implementations. The need for GPU-level memory bandwidth, at scale, and sharing code investments between CPUs and GPUs for running a majority of the workloads in a highly parallelized environment has become essential.

Intel Data Center GPU Max Series is designed for breakthrough performance in dataintensive computing models used in AI and HPC. Based on the Xe HPC architecture that uses both EMIB 2.5D and Foveros packaging technologies to combine 47 active tiles onto a single GPU, fabricated on five different process nodes, Intel Max Series GPUs enable greater flexibility and modularity in the construction of the SOC.

The Intel Xeon CPU Max Series supercharges Intel Xeon Scalable processors with high bandwidth memory (HBM) and is architected to unlock performance and speed discoveries in data-intensive workloads, such as modeling, artificial intelligence, deep learning, high performance computing (HPC) and data analytics.

Key Features

The Intel Data Center GPU Max Series is designed to take on the most challenging high-performance computing (HPC) and AI workloads. The Intel Xe Link high-speed, coherent, unified fabric offers flexibility to run any form factor to enable scale up and scale out.

Up to 408MB of L2 Cache

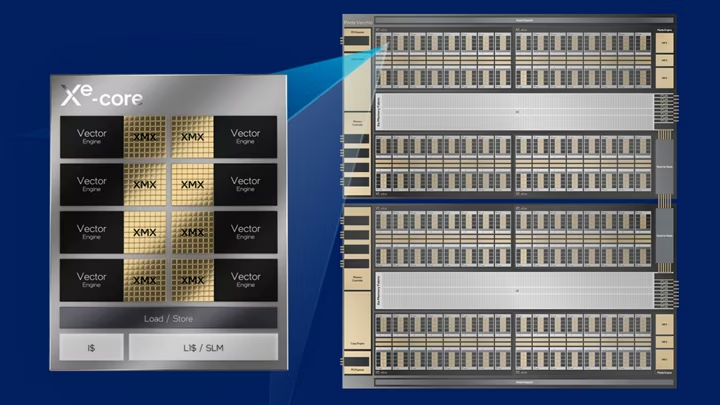

Ensure high capacity and high bandwidth with up to 408 MB of L2 cache (Rambo) based on discrete SRAM technology and 64 MB of L1 cache plus up to 128 GB of high-bandwidth memory.

Built-in Ray Tracing Acceleration

Accelerate scientific visualization and animation with up to 128 ray tracing units incorporated on each Intel® Max Series GPU.

Intel Xe Matrix Extensions (XMX)

Accelerate AI workloads and enable vector and matrix capabilities in a single device with Intel® Xe Matrix Extensions (XMX) built with deep systolic arrays.

Maximize Impact

The Intel Data Center GPU Max Series accelerates science and discovery with breakthrough performance.

Up to

2x

performance gain on HPC and AI workloads over competition due to the Intel Max Series GPU large L2 cache.1

Up to

12.8x

performance gain over 3rd Gen Intel Xeon processors on LAMMPS workloads running on Intel Max Series CPUs with kernels offloaded to six Intel Max Series GPUs, optimized by Intel® oneAPI tools.2

Up to

256x

Int8 operations per clock. Speed AI training and inference with up to 256 Int8 operations per clock with the built-in Intel® XMX.

Solving the world’s most challenging problems…faster

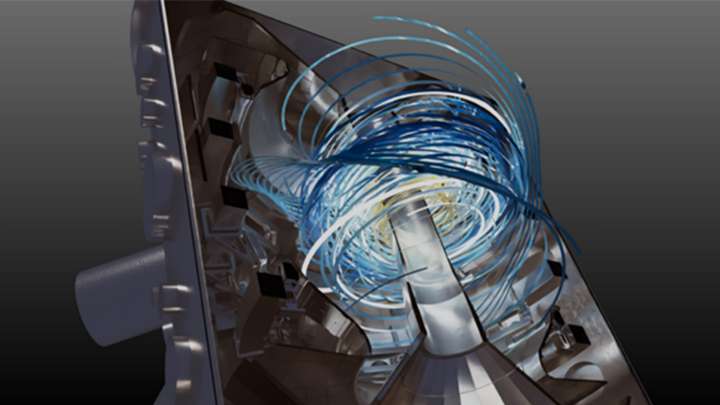

Increased density and compute power is helping researchers solve problems currently out of reach – for example, creating a 3D map of a mouse brain, or modeling patient-specific blood flow to determine where to insert a heart stent.

The U.S. Department of Energy’s Aurora Supercomputer at Argonne National Laboratory (ANL) is expected to be one of the industry’s first supercomputers to feature over 1 exaflop of sustained double-precision performance and over 2 exaflops of peak double-precision performance. Aurora will also be the first to showcase the power of pairing Max Series GPUs and CPUs in a single system, with more than 10,000 blades, each containing six Max Series GPUs and two Xeon Max CPUs.

Accelerating HPC and AI Workloads Across Multiple Architectures

AI models continuously require larger data sets for more effective training. The faster you can process the data, the faster you can train and deploy the model. The GPU accelerates end-to-end AI and data analytics pipelines with libraries optimized for Intel architectures and configurations tuned for HPC and AI workloads, high-capacity storage and high-bandwidth memory.

The entire Intel Max Series product family is unified by oneAPI for a common, open, standards-based programming model to unleash productivity and performance. Intel oneAPI tools include advanced compilers, libraries, profilers and code migration tools to easily migrate CUDA code to open C++ with SYCL. Using oneAPIoptimized deep learning frameworks and machine learning libraries, developers can realize drop-in acceleration for data analytics and machine learning workflows.

This easy-to-deploy, open-standards approach reduces development time, complexity and cost, and enables developers to overcome the constraints of proprietary environments that limit code portability.

Software and Tools

Discover the open software solutions that are enabling the industry’s most flexible GPUs, including code migration tools and advanced compilers, libraries, profilers, and optimized AI frameworks.

The Intel HPC Story

Intel’s industry-leading HPC portfolio was designed to fuel the next generation of computing innovation. Optimized to deliver breakthroughs across a range of factors, these technologies help innovators blast through barriers and solve complex problems in entirely new ways.

15+ OEM Designs

1 Visit intel.com/performanceindex (Events: Supercomputing 22) for workloads and configurations. Results may vary.

2 LAMMPS (Atomic Fluid, Copper, DPD, Liquid_crystal, Polyethylene, Protein, Stillinger-Weber, Tersoff, Water)