Intel Arc GPU for the Edge

Supercharge edge AI, graphics, and media processing with Intel Arc discrete GPUs.

Boost Performance of Edge Workloads

Graphics

For immersive visual experiences.

AI Inferencing

Deploy advanced AI workloads at the edge with specialized AI engines.

Media Processing & Delivery

Enhance video production, media transcoding, and streaming.

Intel Arc A750E Performance Compared to Nvidia

Up to 84% better media processing than the RTX 4070-S

Up to 28% better AI performance than the RTX 4070-S

Up to 22% better Perf/W than the RTX 4070-S

What You Can Do With Intel Arc GPU

Dedicated LLM Processing and Video Analytics with Intel Arc GPU Transform Charging Station Intelligence

The combination of Intel Arc GPU, Intel Core processor, and the developer-friendly OpenVINO toolkit is revolutionizing self-service and EV charging. DFI integrates LLM-powered self-service charging stations with interactive digital signage and uses virtualization technology to support multiple operating systems. With these technologies, EV charging becomes more than just refueling: it turns into an interactive, personalized, and revenue-generating experience.

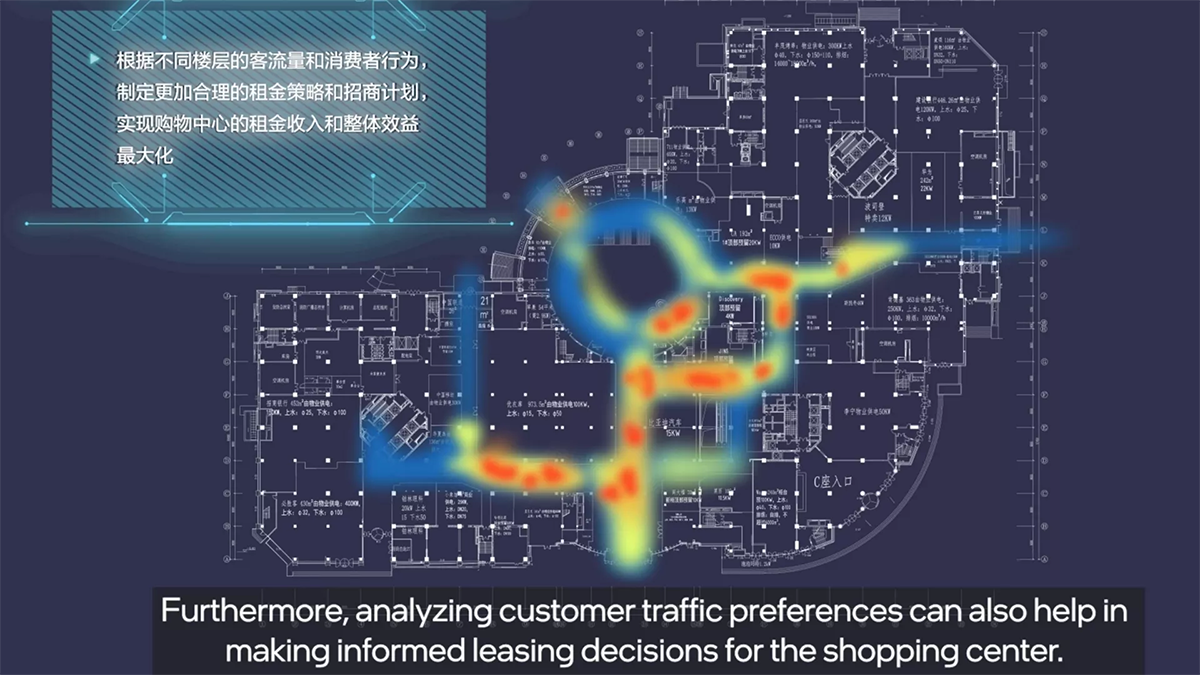

The Future of Shopping: How Malls Are Using AI for Smarter Operations

CUE ushers in a new era of smart retail with Intel Arc GPU for AI inference. By analyzing foot traffic patterns on every floor and consumer preferences for different business types, such as dining and retail, Maples Center gains insight into what its customers want. It can then optimize store layouts and the mix of business types, adjust product offerings, and refine marketing strategies, enhancing the shopping center's appeal, customer satisfaction, and ultimately sales.

Power Advanced Video Walls with Matrox LUMA Pro Series Graphics Cards

Deliver exceptional display density, resolution, and performance for demanding multi-monitor video wall applications with Matrox LUMA Pro Series graphics cards, powered by the Intel Arc GPU.

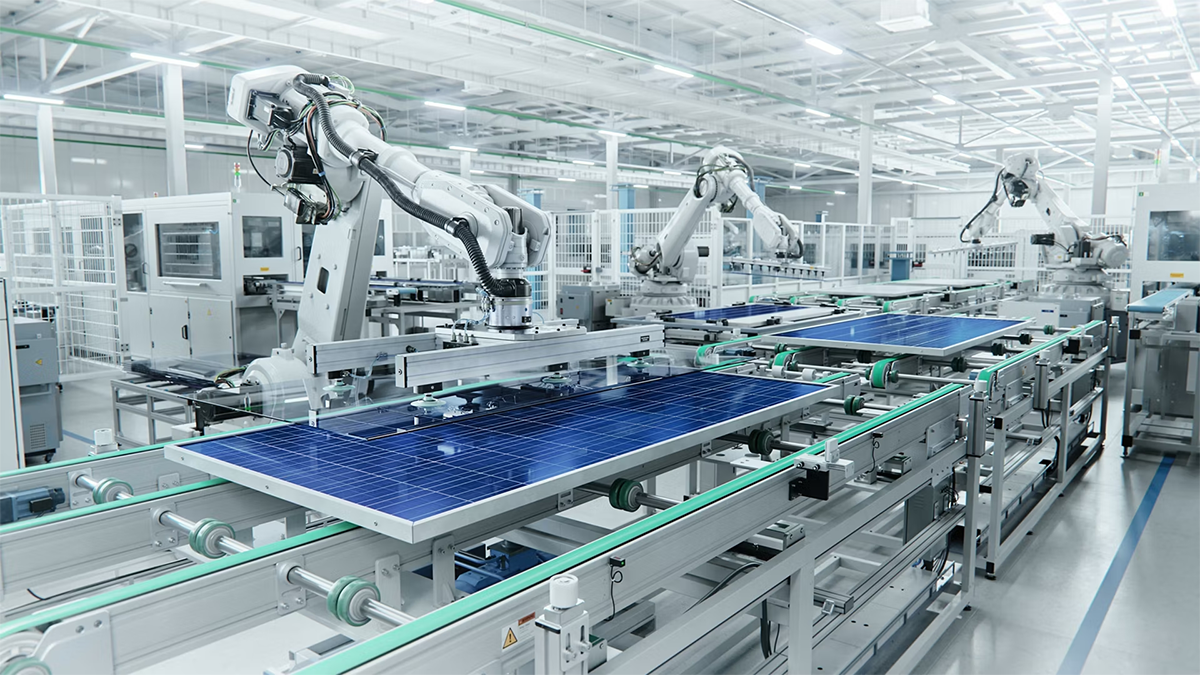

Improve Manufacturing Efficiency with ADLINK's High-Accuracy AOI

The ADLINK automated optical inspection (AOI) solution is built with an Intel CPU, Intel GPUs, and ADLINK AI models optimized with the OpenVINO toolkit. The solution provides highly accurate visual inference at scale to reduce the overhead costs of quality assurance in manufacturing.

Browse Intel Arc GPUs

Intel Arc A310E Graphics Card

- Xe-cores: 6

- GPU Peak TOPS (Int8): 49

- Power: 75 W TBP

- Memory Size: 4 GB GDDR6

- Graphics Memory Bandwidth: 124 GB/s

Intel Arc A350E Graphics Card

- Xe-cores: 6

- GPU Peak TOPS (Int8): 28

- Power: 25-35 W TGP

- Memory Size: 4 GB GDDR6

- Graphics Memory Bandwidth: 112 GB/s

Intel Arc A370E Graphics Card

- Xe-cores: 8

- GPU Peak TOPS (Int8): 38

- Power: 35-50 W TGP

- Memory Size: 4 GB GDDR6

- Graphics Memory Bandwidth: 112 GB/s

Intel Arc A380E Graphics Card

- Xe-cores: 8

- GPU Peak TOPS (Int8): 66

- Power: 75 W TBP

- Memory Size: 6 GB GDDR6

- Graphics Memory Bandwidth: 186 GB/s

Intel Arc A580E Graphics Card

- Xe-cores: 24

- GPU Peak TOPS (Int8): 197

- Power: 185 W TBP

- Memory Size: 16 GB GDDR6

- Graphics Memory Bandwidth: 512 GB/s

Intel Arc A750E Graphics Card

- Xe-cores: 28

- GPU Peak TOPS (Int8): 229

- Power: 225 W TGP

- Memory Size: 16 GB GDDR6

- Graphics Memory Bandwidth: 512 GB/s
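
As a rough way to compare the cards listed above, the following Python sketch divides each card's peak INT8 TOPS by its rated power. The figures are copied from the spec lists. Using the upper end of the TGP ranges for the A350E and A370E is an assumption made here, and TBP and TGP are not the same measurement, so the result is only a ballpark ratio, not a measured Perf/W figure.

```python
# Specs copied from the lists above: (card, peak INT8 TOPS, rated power in W).
# Assumption: for cards with a TGP range, the upper bound is used.
CARDS = [
    ("A310E", 49, 75),
    ("A350E", 28, 35),
    ("A370E", 38, 50),
    ("A380E", 66, 75),
    ("A580E", 197, 185),
    ("A750E", 229, 225),
]


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak INT8 TOPS divided by rated power (a rough efficiency ratio)."""
    return tops / watts


def rank_by_efficiency(cards):
    """Return the cards sorted from highest to lowest TOPS per watt."""
    return sorted(cards, key=lambda c: tops_per_watt(c[1], c[2]), reverse=True)


if __name__ == "__main__":
    for name, tops, watts in rank_by_efficiency(CARDS):
        print(f"{name}: {tops_per_watt(tops, watts):.2f} TOPS/W")
```

Peak TOPS is a theoretical ceiling, so real workloads should be benchmarked before choosing a card on this ratio alone.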

Partner GPU Cards for the Edge

Accelerating AI Inference for Developers

With the OpenVINO toolkit's optimization techniques, such as model quantization, layer fusion, and hardware-level optimizations, you can significantly improve the efficiency of AI inference. When deployed on a discrete GPU, these optimized models take advantage of the GPU's parallel processing capabilities, resulting in faster inference. The Automatic Device Selection (AUTO) plugin routes inference requests to the best available CPU or GPU resource, prioritizing latency or throughput as needed.
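
A minimal Python sketch of how AUTO might be used with a GPU-first device priority and a performance hint. The helper names (`auto_device_string`, `auto_config`, `compile_for_edge`) and the model path are hypothetical and chosen for illustration. The sketch assumes the `openvino` Python package is installed and that a model file in a supported format is available.

```python
from typing import Dict, Sequence


def auto_device_string(priorities: Sequence[str] = ("GPU", "CPU")) -> str:
    """Build an AUTO device string, e.g. "AUTO:GPU,CPU" (GPU tried first)."""
    if not priorities:
        return "AUTO"
    return "AUTO:" + ",".join(priorities)


def auto_config(prefer_latency: bool = True) -> Dict[str, str]:
    """Choose the performance hint: LATENCY or THROUGHPUT."""
    return {"PERFORMANCE_HINT": "LATENCY" if prefer_latency else "THROUGHPUT"}


def compile_for_edge(model_path: str, prefer_latency: bool = True):
    """Compile a model with AUTO (hypothetical helper; needs openvino installed)."""
    import openvino as ov  # imported lazily so the helpers above work without it

    core = ov.Core()
    model = core.read_model(model_path)
    return core.compile_model(
        model, auto_device_string(), auto_config(prefer_latency)
    )


if __name__ == "__main__":
    print(auto_device_string())   # device string passed to compile_model
    print(auto_config(False))     # config for throughput-oriented workloads
```

A latency hint generally suits interactive use such as a kiosk, while a throughput hint suits batch work such as multi-stream video analytics.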

OpenVINO helps you stay cost-efficient while achieving exceptional GPU performance. The OpenVINO 2024.0 release makes generative AI more accessible for real-world scenarios with broader model support, reduced memory usage, and additional compression techniques for large language models (LLMs). Developers also have more flexibility to work with their framework of choice. Download today and start creating AI solutions.
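
To give a feel for what quantization and weight compression do, here is a generic Python sketch of symmetric per-tensor INT8 quantization. It illustrates the general idea only and is not OpenVINO's implementation. The function names are made up for this example.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    # Each weight now fits in 1 byte instead of 4 (FP32), at a small accuracy cost.
    for w, r in zip(weights, restored):
        print(f"{w:+.4f} -> {r:+.4f}")
```

Storing one byte per weight instead of four is where the memory savings come from. Production toolchains add calibration and finer-grained scales to limit accuracy loss.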